Headless puppies, fishing nets, and G&Ts – humanity’s millennia-long war against malaria

This week the WHO recommended the world’s first malaria vaccine for general use, opening a new chapter in the story of humanity’s fight against this ancient disease.

- 7 October 2021


- by Maya Prabhu

In the mid-fifth century CE, on a hill overlooking the Tiber, a community hastily buried 49 children, sometimes tucking five or six infants into a single grave. Around the little bodies, they arranged strange talismans: the headless skeletons of several puppies, a raven’s talon. One child would be unearthed more than 1,500 years later with a stone the size of a large bird’s egg still wedging its jaws wide open: a “vampire burial”, according to archaeologists, who believe the stone was intended to keep the dead from rising and spreading disease.

Researchers working on the site, the Necropoli dei Bambini in Lugnano, Italy, 70 miles north of Rome, describe the ritual objects as evidence of helpless terror. “Village witchcraft,” says Dr David Soren, a leading scholar of the cemetery: the desperate pagan propitiations of a newly Christian people overwhelmed by an epidemic killer.


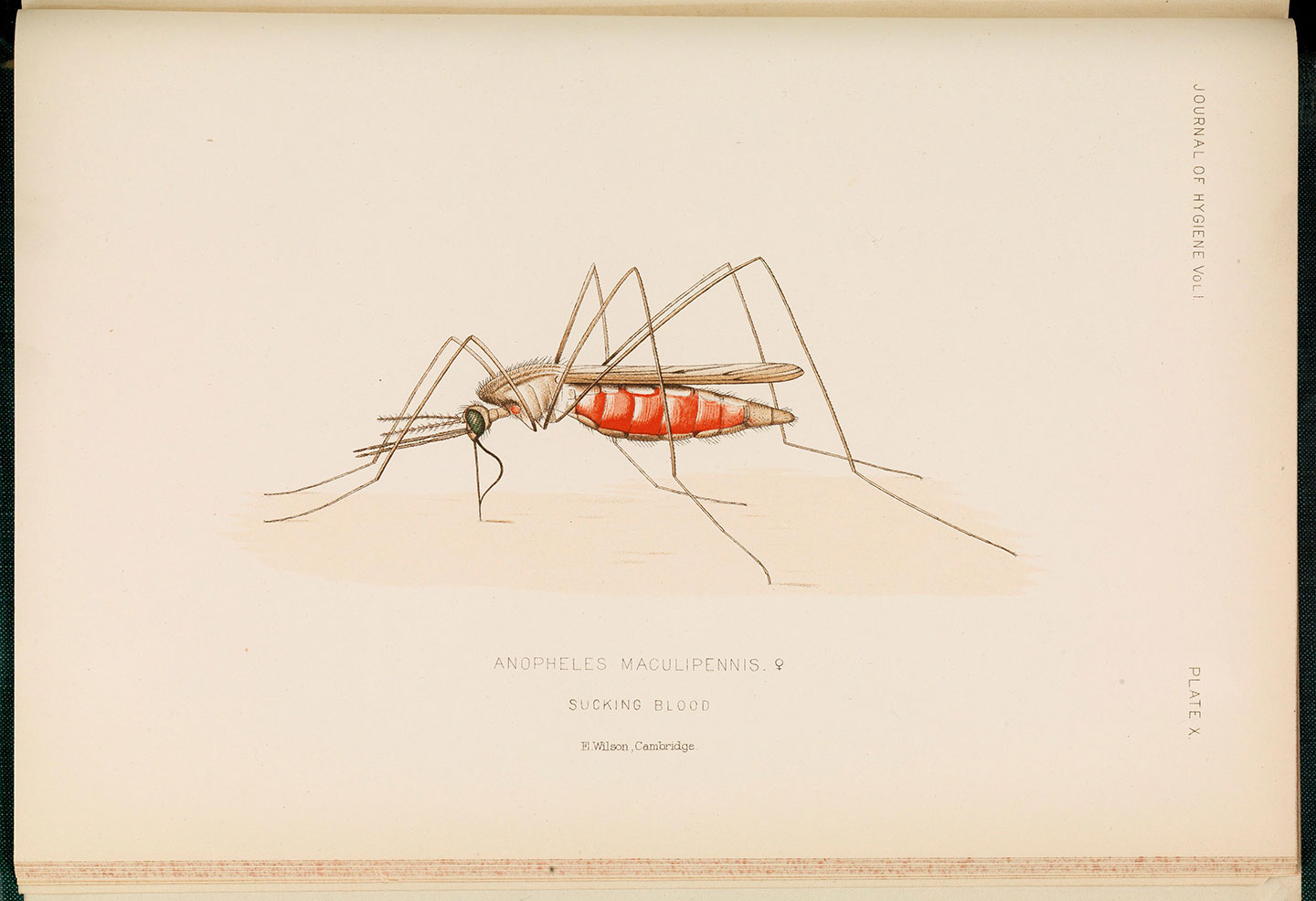

In literature, the marshland surrounding Rome has often been described as unwholesome, swathed in noxious, fever-inducing vapours: “bad air” or, in Italian, mal’aria. By the time science understood¹ that it was the insects of the wetlands – specifically, female Anopheles mosquitoes – rather than the marsh gases that spread the deadly infection, the name had stuck.

Credit: Wellcome Collection. Attribution 4.0 International (CC BY 4.0)

Malaria was an early suspect in the deaths of the Lugnano infants: the setting fit, and so did a tell-tale pattern of pitting in the children’s bones. Even today, the disease is deadliest for young children: more than 60% of the 400,000 people malaria kills each year are under five.

Then, in 2001, a team of scientists isolated DNA from the leg bones of a Lugnano skeleton and confirmed the speculative diagnosis: the child had indeed been infected with Plasmodium falciparum, still the most virulent species of malaria parasite in circulation today. The results “tend to confirm the archaeologists' hypothesis that [malaria] was the cause of the Lugnano epidemic,” wrote the researchers.

At the time, this was the oldest direct, definitive evidence of malaria infection in a human skeleton, but the saga of humans and malaria is much older² than the story nailed down by DNA. A set of Sumerian tablets from 3200 BCE “unquestionably describe malarious fevers” and credit them to Nergal, a mosquito-like god of the underworld, writes historian Timothy C. Winegard in his book The Mosquito.


Millennia before the Lugnano outbreak, Egypt’s fortunes took a historic turn when King Tutankhamun died in around 1324 BCE, probably from complications of a falciparum infection. Ancient Chinese and Indian medical texts describe the periodically remitting pattern of malarial fevers and pinpoint the classic symptom of an enlarged spleen. In the monsoon of 326 BCE, the apparently undefeatable army of Alexander the Great surged into the Indian subcontinent and, along the humid, mosquito-humming shores of the Indus River system, began to sicken. In 323 BCE, back in Babylon after the long retreat west, Alexander himself fell ill. His fever burned in falciparum-like cycles for 12 days, and then he died.


We have been seeking – through evolution, avoidance, medicine and magic – to control malaria for nearly as long as it has been stalking us. Scientists at the University of Rome have found evidence that the falciparum parasites that ravaged Lugnano in the fifth century (against which the talismanic headless puppies presumably had little effect) had travelled to the Tiber’s mosquito-infested marshes from Africa via Sardinia. Many African communities, however, would have been far better protected than their hard-hit Italian counterparts, thanks to a heritable genetic mutation that had first appeared there 7,300 years ago.

The sickle-cell trait, which Winegard calls “our immediate evolutionary counteroffensive” to falciparum malaria, gives carriers 90% protection against the disease; there are about 50–60 million carriers alive today. People who inherit two copies of the sickle-cell gene, however, suffer excruciating, often life-limiting sickle-cell anaemia³.

The sickle-cell trait is only one of the defences our bodies have developed against malaria over the ages. Thalassaemia, for instance, offers 50% protection against vivax malaria and has left traces in skeletons 7,000 years old, while “Duffy negativity” makes a person highly resistant to both vivax and knowlesi malaria but also correlates with greater vulnerability to other health conditions such as pneumonia and certain cancers. Acquired immunity, built up through non-fatal exposure, accounts for the relatively low mortality rates among adults. Even so, the persistent menace of malaria has driven us to forge a varied arsenal of supplementary weapons against both the Anopheles mosquito and the parasite it transmits. Some of them have even worked.

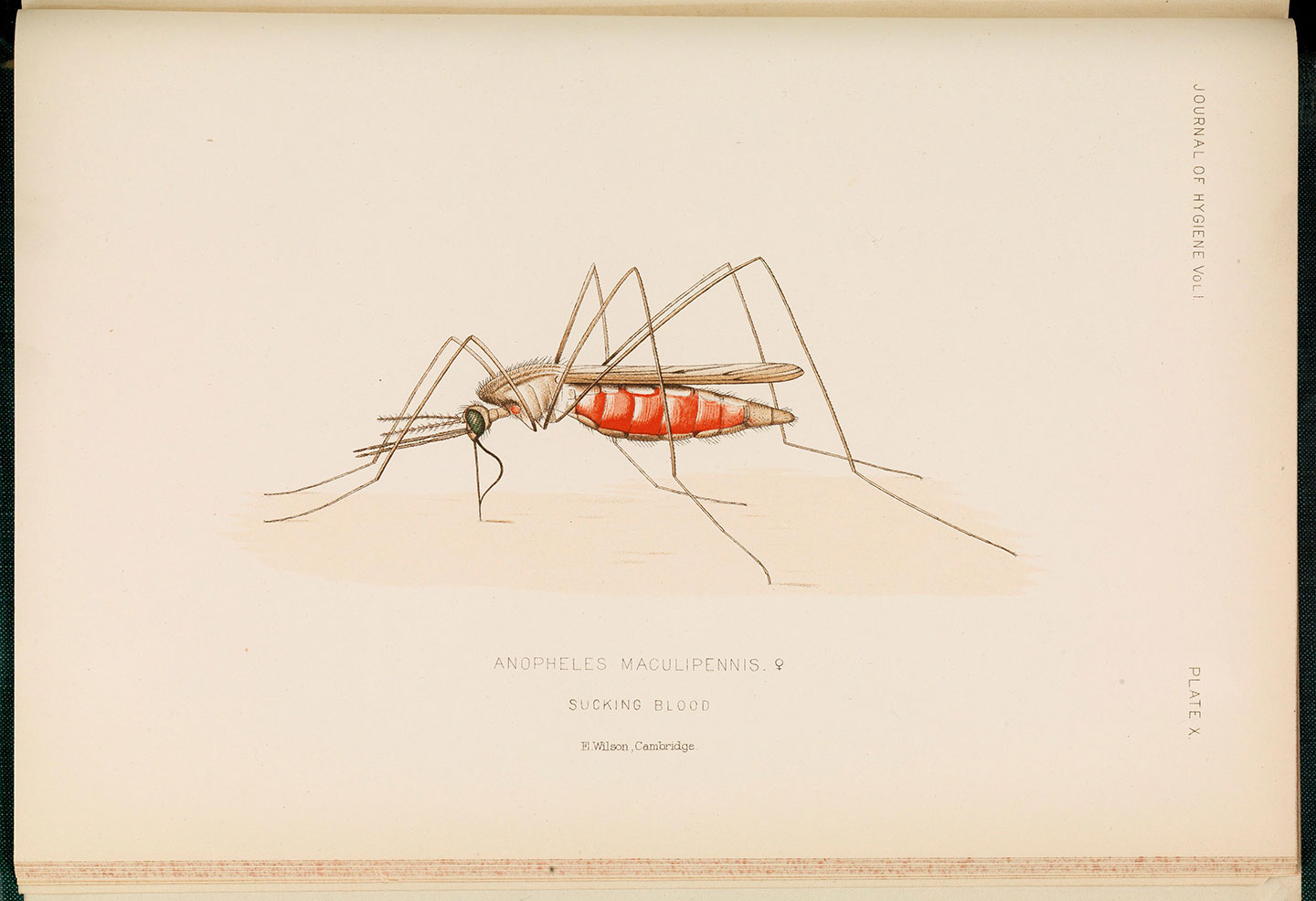

In fact, it’s striking how long versions of certain important anti-malarials have been in use. Artemisinin, used against falciparum infections, is derived from sweet wormwood, which was recommended for treating chills and fevers in China nearly 2,000 years ago. People knew that it worked long before they knew how or why; those discoveries came only in the mid-1970s. Similarly, though quinine was isolated as a compound only in 1820, it was the active ingredient in a medicinal infusion of cinchona tree bark commonly attributed to Jesuit missionaries in 17th-century Peru, and used even earlier by Indigenous people in the region. Its impact and method of delivery changed – famously, the favourite quinine vehicle of British colonials in India was the gin and tonic – but it was not a fundamentally new drug.

Credit: Wellcome Collection. Public Domain Mark

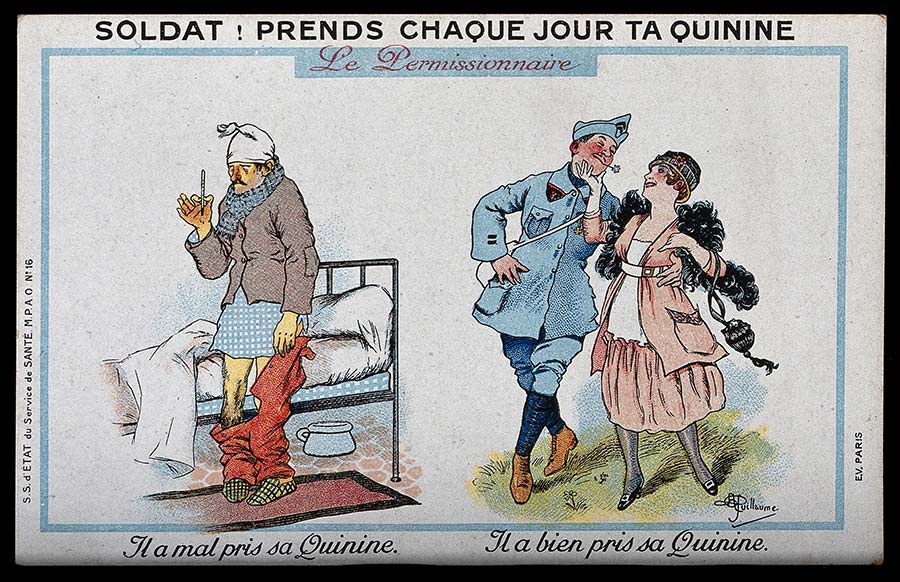

Efforts to stave off the malaria parasite – rather than cure the infection – have usually targeted mosquito control. Sometimes, that has meant erecting barriers. Insecticide-treated bed nets are an effective tool today, with studies suggesting they can prevent between 39% and 62% of malaria infections. Even they have ancient forebears: Herodotus described marsh-dwelling Egyptians wrapping themselves in fishing nets, though whether they understood that they were saving themselves from a fatal fever, or were simply warding off irritating insects, is harder to establish.

Credit: Wellcome Collection. Public Domain Mark

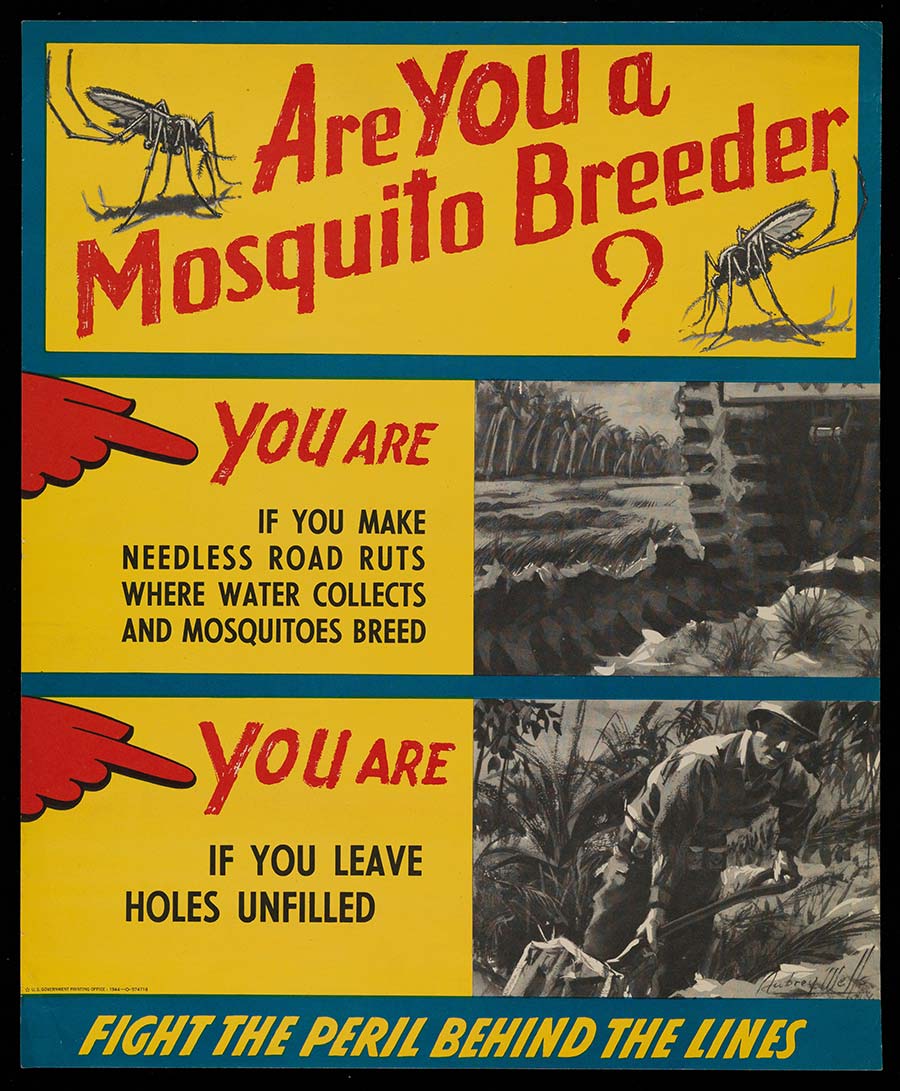

Often, mosquito control means habitat management. Emperor Nero drained the swamps near Rome; centuries later, in the 19th century, Giuseppe Garibaldi tried to divert the Tiber away from the still-malarial city. Italy’s relationship with the disease changed decisively only after World War II, when a series of malaria-control campaigns using the insecticide DDT made extraordinary headway in a number of countries. The USA eradicated malaria in 1951; Italy joined the ranks of the malaria-free in 1970. Before DDT’s environmental devastation was recognised, malaria had almost been wiped out in South Africa, Sri Lanka and Mozambique.

Credit: Wellcome Collection. In copyright

Like our evolutionary defences, our patchwork of human-designed tools against malarial infection has offered meaningful but only ever partial protection. Extraordinary progress has been made, but the bug has – so far – defied a simple fix. A vaccine has been the missing piece of the puzzle: vaccines against parasites are notoriously difficult to develop. This week’s WHO recommendation of the RTS,S malaria vaccine – the world’s first – is far from a silver bullet, but it marks the beginning of an important new chapter in our millennia-long battle against this ancient disease.